The Resonance Atlas

Status: Design + Prototyping

The Resonance Atlas is a research and data-structuring platform designed to bring coherence to fragmented human records. Across science, history, anthropology, psychology, and personal testimony, vast amounts of information exist in isolation. These records are difficult to compare, difficult to validate, and often impossible to analyze as a system. The Resonance Atlas addresses this by providing a shared structural framework that allows disparate sources to be examined together.

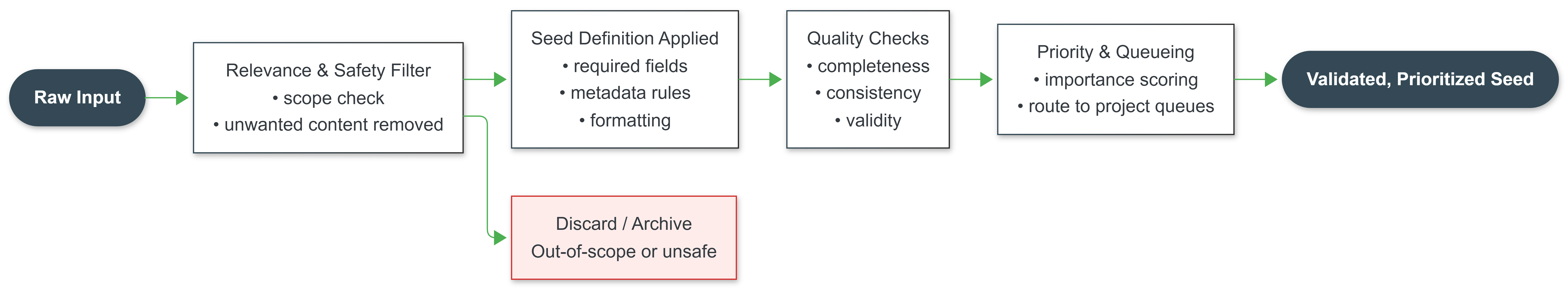

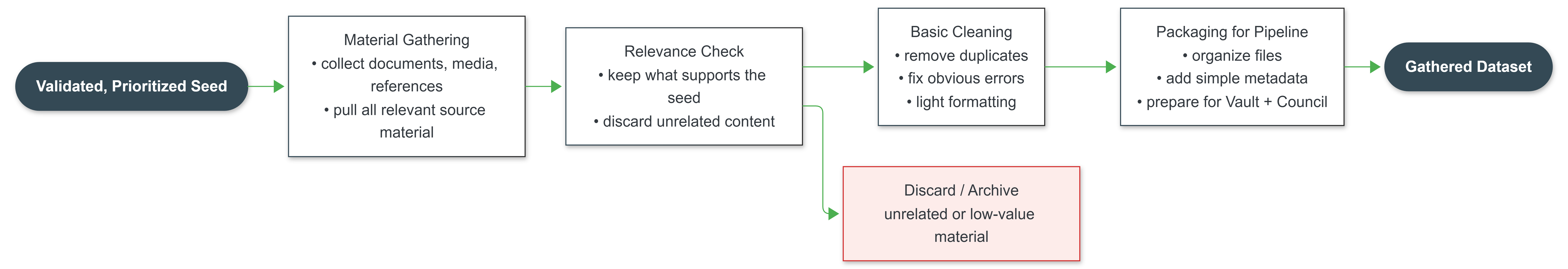

Information enters the Atlas as seeds. Each seed represents a discrete unit of source material and is validated, enriched with metadata, embedded, and stored. Once indexed, the same seed can be viewed through multiple disciplinary lenses without altering the underlying facts. This enables parallel analysis by historians, anthropologists, scientists, and behavioral researchers while preserving traceability to original sources.
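The seed lifecycle described above (validate, enrich, embed, store, then view through lenses) can be sketched as follows. This is a minimal illustration that assumes a seed can be modeled as an immutable record. `Seed`, `ingest`, `toy_embed`, and the two lens functions are hypothetical names. `toy_embed` is a hashed bag-of-words placeholder standing in for whatever embedding model the Atlas actually uses. The point of the sketch is that lenses return new views and never modify the stored seed.

```python
import hashlib
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Callable, Mapping


@dataclass(frozen=True)
class Seed:
    """One discrete unit of source material. Frozen so that lenses cannot alter it."""
    seed_id: str
    source: str  # provenance: citation or location of the original material
    text: str  # the source material, stored verbatim
    metadata: Mapping[str, str] = field(default_factory=dict)
    embedding: tuple[float, ...] = ()


def validate(seed_id: str, source: str, text: str) -> None:
    # Hypothetical minimum checks: reject seeds with no content or no provenance.
    if not text.strip():
        raise ValueError(f"seed {seed_id}: empty text")
    if not source.strip():
        raise ValueError(f"seed {seed_id}: missing source")


def toy_embed(text: str, dim: int = 8) -> tuple[float, ...]:
    # Placeholder embedding (hashed bag of words, L2-normalised);
    # a real deployment would call an actual embedding model here.
    vec = [0.0] * dim
    for token in text.lower().split():
        vec[hashlib.sha256(token.encode()).digest()[0] % dim] += 1.0
    norm = sum(v * v for v in vec) ** 0.5
    return tuple(v / norm for v in vec) if norm else tuple(vec)


def ingest(store: dict, seed_id: str, source: str, text: str, **metadata: str) -> Seed:
    # Validate -> enrich with metadata -> embed -> store, in that order.
    validate(seed_id, source, text)
    enriched = {"word_count": str(len(text.split())), **metadata}
    seed = Seed(seed_id, source, text, MappingProxyType(enriched), toy_embed(text))
    store[seed_id] = seed
    return seed


# A lens is any function that reads a seed and returns a discipline-specific view.
Lens = Callable[[Seed], dict]


def historian_lens(seed: Seed) -> dict:
    return {"seed_id": seed.seed_id, "source": seed.source,
            "era": seed.metadata.get("era", "unknown")}


def scientist_lens(seed: Seed) -> dict:
    return {"seed_id": seed.seed_id, "word_count": int(seed.metadata["word_count"]),
            "embedding_dim": len(seed.embedding)}


store: dict[str, Seed] = {}
seed = ingest(store, "seed-001", "archive/box-12/item-3", "Example testimony text", era="1700s")
lenses: list[Lens] = [historian_lens, scientist_lens]
for lens in lenses:
    print(lens(seed))
```

Because `Seed` is frozen and its metadata is wrapped in a read-only `MappingProxyType`, any number of lenses can read the same seed in parallel while the stored facts and their provenance stay unchanged.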

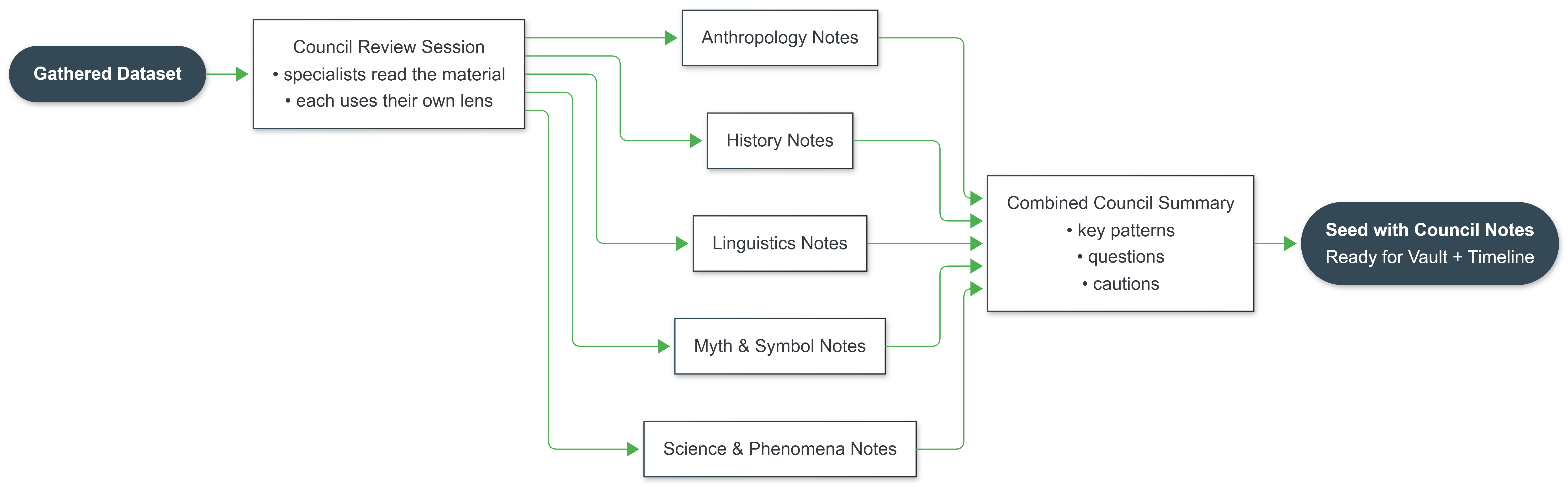

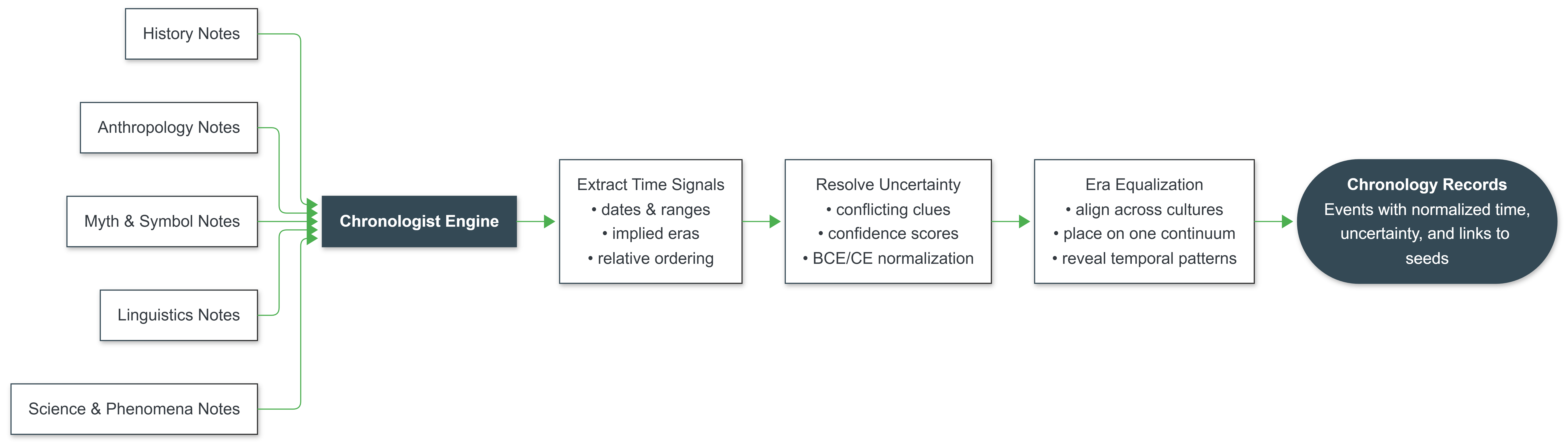

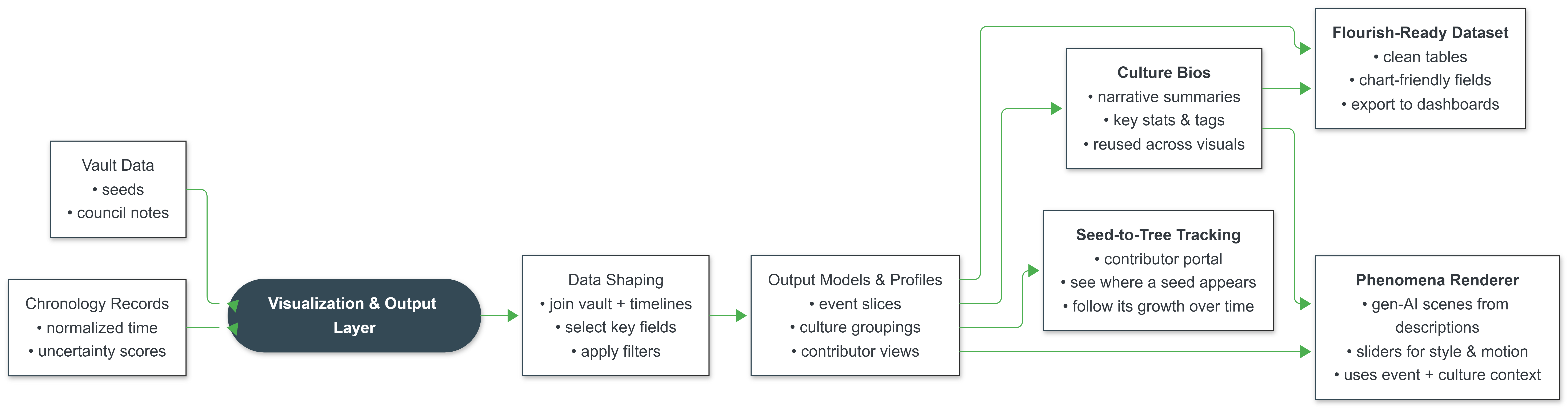

The platform treats meaning as an emergent property of structured data rather than as a fixed interpretation. Seeds move through a defined pipeline: Gatherers collect and normalize inputs, the Vault anchors them as canonical records, the Council provides domain-specific interpretation without overriding source material, and the Librarian enforces consistency and retrieval integrity. Visualization layers expose patterns, distributions, and anomalies directly, allowing users to observe behavior in the data rather than consume predefined narratives.
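The four pipeline roles can be sketched as plain Python classes. This is a minimal illustration under stated assumptions, not the Atlas's implementation. The normalization rules (Unicode NFC plus whitespace collapsing), the content-hash record ids, and the separate interpretation layer keyed by `(record_id, domain)` are all hypothetical choices. Together they show one way interpretation can sit beside canonical records without overwriting them.

```python
import hashlib
import unicodedata
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    record_id: str
    source: str
    text: str


class Gatherer:
    """Collects raw input and normalises it (hypothetical rules: NFC + collapsed whitespace)."""
    def normalize(self, source: str, raw_text: str) -> tuple[str, str]:
        text = " ".join(unicodedata.normalize("NFC", raw_text).split())
        return source.strip(), text


class Vault:
    """Append-only canonical store. Ids are content hashes, so re-anchoring identical input is a no-op."""
    def __init__(self) -> None:
        self._records: dict[str, Record] = {}

    def anchor(self, source: str, text: str) -> Record:
        record_id = hashlib.sha256(f"{source}\n{text}".encode()).hexdigest()[:16]
        # setdefault never replaces an existing record, so canonical entries cannot be overwritten.
        return self._records.setdefault(record_id, Record(record_id, source, text))

    def get(self, record_id: str) -> Record:
        return self._records[record_id]

    def __contains__(self, record_id: str) -> bool:
        return record_id in self._records


class Council:
    """Domain interpretations live in their own layer; Vault records are never touched."""
    def __init__(self) -> None:
        self._notes: dict[tuple[str, str], str] = {}

    def interpret(self, record_id: str, domain: str, note: str) -> None:
        self._notes[(record_id, domain)] = note

    def notes_for(self, record_id: str) -> dict[str, str]:
        return {domain: note for (rid, domain), note in self._notes.items() if rid == record_id}

    def record_ids(self) -> set[str]:
        return {rid for rid, _ in self._notes}


class Librarian:
    """Enforces consistency (no interpretation without a canonical record) and serves retrieval."""
    def __init__(self, vault: Vault, council: Council) -> None:
        self.vault, self.council = vault, council

    def check(self) -> list[str]:
        # Ids of interpretations that point at records the Vault does not hold.
        return sorted(rid for rid in self.council.record_ids() if rid not in self.vault)

    def retrieve(self, record_id: str) -> dict:
        return {"record": self.vault.get(record_id),
                "interpretations": self.council.notes_for(record_id)}


gatherer, vault, council = Gatherer(), Vault(), Council()
librarian = Librarian(vault, council)
source, text = gatherer.normalize(" archive/item-1 ", "Example   raw\ntestimony")
record = vault.anchor(source, text)
council.interpret(record.record_id, "anthropology", "hypothetical interpretive note")
print(librarian.retrieve(record.record_id))
print(librarian.check())  # → []
```

Keeping interpretations in a separate structure means removing or revising a Council note never touches the canonical record. It also means the Librarian can detect orphaned interpretations with a simple membership check.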

As the dataset grows, the system enables new forms of comparative analysis. Researchers can examine patterns across cultures, eras, and environments. Historians can test recurring motifs without relying on isolated case studies. Scientists can treat long-form historical or testimonial records as observational data rather than anecdote. Individuals can explore their own experiences in context, without reinforcement loops or prescriptive explanations.

The Resonance Atlas is not designed to explain unresolved questions or promote conclusions. Its purpose is to make complex bodies of information legible. By structuring inputs consistently and preserving provenance, patterns become visible, outliers become measurable, and interpretation becomes a layer applied after the data is understood. Over time, as more seeds are added, the basis for comparison broadens, enabling clearer analysis and more reliable insight.
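As one illustration of what "outliers become measurable" could mean in practice, here is a minimal sketch. It assumes seeds carry a categorical metadata tag (the `region` field is hypothetical) and treats an outlier as a group whose seed count lies more than two population standard deviations from the mean. The z-score rule is a deliberately simple stand-in, not the Atlas's documented method.

```python
from collections import Counter
from statistics import mean, pstdev


def group_counts(seeds: list[dict], key: str) -> Counter:
    """Count seeds per value of a metadata field (e.g. a hypothetical 'region' tag)."""
    return Counter(seed[key] for seed in seeds)


def outliers(counts: Counter, threshold: float = 2.0) -> dict[str, float]:
    """Return groups whose count lies more than `threshold` population std devs from the mean."""
    values = list(counts.values())
    if len(values) < 2:
        return {}
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return {}  # every group has the same count, so nothing stands out
    scores = {group: (n - mu) / sigma for group, n in counts.items()}
    return {group: z for group, z in scores.items() if abs(z) > threshold}


# Hypothetical data: five regions with one seed each, one region with twenty.
seeds = [{"region": r} for r in "abcde"] + [{"region": "f"}] * 20
print(outliers(group_counts(seeds, "region")))
```

With `n` groups, a population z-score cannot exceed `sqrt(n - 1)` in magnitude. As a result, with fewer than six groups nothing can cross a threshold of 2. This is one concrete sense in which the analysis only becomes meaningful as more seeds accumulate.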